The Miniloan application is part of the IBM Operational Decision Management product and tutorial. The goal of this hybrid AI demonstration is to illustrate how an unstructured natural-language query can be decomposed by an LLM to identify the parameters needed to call the loan application decision service, and how to do this with the OWL framework.

The end-user's questions that are tested and validated are:

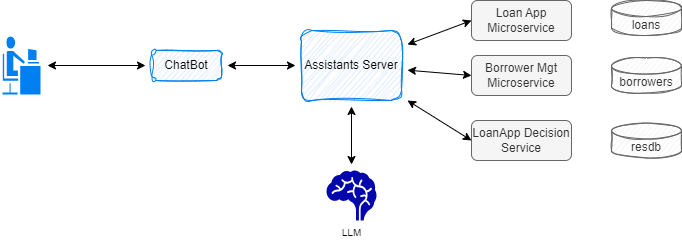

The high-level architecture for this demonstration is shown in the figure below:

To keep things simple, the loanApp and client repositories are mockups loaded in memory.
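A mock repository of this kind can be sketched as follows. This is only an illustration of the in-memory approach: the `Client` fields, the `InMemoryClientRepository` class, and the sample record are hypothetical and are not taken from the demonstration code.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Client:
    # Hypothetical fields, chosen for illustration only.
    name: str
    yearly_income: float
    credit_score: int


class InMemoryClientRepository:
    """Mock repository: records live in a dict instead of a database."""

    def __init__(self, clients: List[Client]) -> None:
        # Index by lower-cased name so lookups are case-insensitive.
        self._by_name: Dict[str, Client] = {c.name.lower(): c for c in clients}

    def get_client_by_name(self, name: str) -> Optional[Client]:
        # Returns None when the client is unknown.
        return self._by_name.get(name.strip().lower())


repo = InMemoryClientRepository([
    Client(name="Jane Doe", yearly_income=80000.0, credit_score=720),
])
print(repo.get_client_by_name("jane doe"))
```

Because the data is loaded when the process starts, tests and live demonstrations need no external database.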

Recall that the rules implemented validate the data on the loan and borrower attributes:

These rule evaluations are key to getting reliable answers, even when the input is unstructured text.

For more detail on the OWL Core components design see this note.

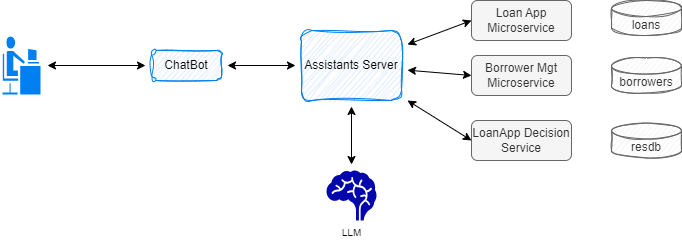

As of now, the owl-backend is a container image, deployable as a unit, that is able to mount the Python code of the demonstration to run the different orchestration logic. The diagram illustrates this setup running on a local machine.

For production deployment the owl-backend code and the specific logic may be packaged in its own container.

cp ../demo_tmpl/.env_tmpl .env

From the `IBM-MiniLoan-demo/deployment/local/` folder: `docker compose up -d`

python non_regression_tests.py

The script validates:

The user interface may be used to do a live demonstration:

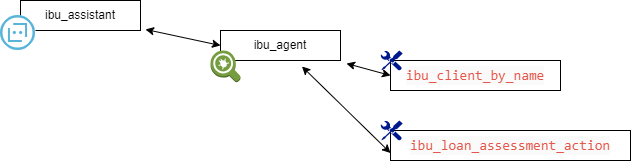

In the following figure, the ibu_assistant_limited assistant tries to answer the question. The tool call to access the client data succeeds, but the LLM generates hallucinations:

This section explains how to use the OWL framework to support the demonstration.

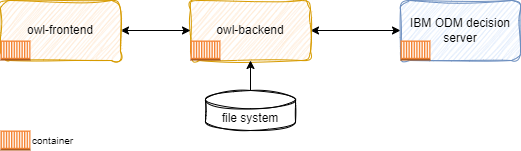

An assistant helps a customer representative answer questions and queries about a loan. The assistant entity uses an agent, which is linked to the LLM to call via API and to the tool definitions.

`ibu_assistant`:

- class: `athena.llm.assistants.BaseAssistant.BaseAssistant`
- description: A default assistant that uses LLM, and local defined tools like get borrower, and next best action
- name: IBU Loan App assistant
- agent: `ibu_agent`

The assistant without ODM decision service function is:

`ibu_assistant_limited`:

- class: `athena.llm.assistants.BaseAssistant.BaseAssistant`
- description: A default assistant that uses LLM, and local defined tools like get borrower, without decision service
- name: IBU Loan App assistant
- agent: `ibu_agent_limited`

`ibu_agent`:

- name: `ibu_agent`
- description: openai based agent with IBU loan app prompt and tools
- class: `athena.llm.agents.base_chain_agent.OwlAgent`
- model: `gpt-3.5-turbo-0125`
- model class: `langchain_openai.ChatOpenAI`
- prompt: `ibu_loan_prompt`
- tools: `ibu_client_by_name`, `ibu_loan_assessment_action`

`ibu_client_by_name`:

- function: `get_client_by_name`

`ibu_loan_assessment_action`:

- module: `ibu.llm.tools.client_tools`
- function: `assess_loan_app_with_decision`

Assistants, agents, and prompts are declarative. Tools also need a declaration, plus some code to do the integration.
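One way such a declaration can be turned into a callable is to import the declared module and look up the declared function by name. The sketch below assumes a declaration held as a plain dictionary; the keys `id`, `module`, and `function`, and the helper `resolve_tool_function`, are hypothetical and do not describe how the OWL framework itself loads tools. The standard-library `math.sqrt` stands in for the demo function so the example runs anywhere.

```python
import importlib
from typing import Any, Callable, Dict


def resolve_tool_function(declaration: Dict[str, str]) -> Callable[..., Any]:
    """Import the declared module and return the declared function."""
    module = importlib.import_module(declaration["module"])
    func = getattr(module, declaration["function"])
    if not callable(func):
        raise TypeError(f"{declaration['function']} is not callable")
    return func


# Stand-in declaration using the standard library.
# In the demo, the module would be ibu.llm.tools.client_tools and the
# function would be assess_loan_app_with_decision.
demo_declaration = {"id": "demo_sqrt", "module": "math", "function": "sqrt"}
tool = resolve_tool_function(demo_declaration)
print(tool(16.0))
```

Keeping the module path and function name in configuration means a new tool can be added without changing the code that assembles the agent.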

If you need to work on the current demonstration and run some of the test cases, this section describes what needs to be done to run it on your own laptop (or a VM in the cloud) with a Docker engine. Currently, in development mode, the source code of the core framework is needed, so you need to clone the GitHub repository (in the future, we may build a module that can be installed via pip install).

For example, in `$HOME/Code/Athena`: `git clone https://github.com/AthenaDecisionSystems/athena-owl-core`

pip install -r tests/requirements.txt

pytest -s tests/ut

For integration tests, you need to start the backend using Docker Compose as explained before, then run all the integration tests via the command:

pytest -s tests/it

The previous section shows the YAML manifests that declare the assistant, agent, and tools. Each demonstration will have different tools. This section explains the tools implemented in this demonstration, which may help for future development.

Each demonstration has its own folder, with mostly the same structure:

| Folder | Intent |
|---|---|
| decisions/persistence | Includes the resDB ruleApps from IBM ODM |
| deployment | Infrastructure as code and other deployment manifests |
| e2e | End-to-end, scenario-based testing with all components deployed |
| ibu_backend/src | The code for the demo |
| ibu_backend/openapi | Any OpenAPI documents that could be used to generate Pydantic objects. The ODM REST ruleset OpenAPI is used. |
| ibu_backend/config | The different configuration files for the agentic solution entities |
| ibu_backend/tests | Unit tests (ut folder) and integration tests (it folder) |

The tool functions are coded in one module, client_tools.py. This module implements the different functions used as part of the LLM orchestration, and also the factory that builds the tools for the LangChain framework.

Take the following tool definition:

`ibu_loan_assessment_action`:

- module: `ibu.llm.tools.client_tools`
- function: `assess_loan_app_with_decision`

The module `ibu.llm.tools.client_tools` includes the function `assess_loan_app_with_decision`, which exposes the parameters that the LLM can extract from the unstructured text and hand back to the LangChain chains to perform the tool call. The code then prepares the payload for the ODM service.
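The payload preparation step can be sketched as below. This is a minimal illustration and not the code of `assess_loan_app_with_decision`: the helper name `build_loan_payload`, its parameters, and the JSON key names are assumptions, and the real request body must follow the ODM ruleset OpenAPI document.

```python
import json
from typing import Any, Dict


def build_loan_payload(
    borrower_name: str,
    yearly_income: float,
    credit_score: int,
    loan_amount: float,
    duration_months: int,
    yearly_interest_rate: float,
) -> Dict[str, Any]:
    """Group the values extracted by the LLM into one request body."""
    return {
        # Key names are assumptions, not the actual ODM ruleset schema.
        "borrower": {
            "name": borrower_name,
            "yearlyIncome": yearly_income,
            "creditScore": credit_score,
        },
        "loan": {
            "amount": loan_amount,
            "duration": duration_months,
            "yearlyInterestRate": yearly_interest_rate,
        },
    }


payload = build_loan_payload("Jane Doe", 80000.0, 720, 50000.0, 120, 0.05)
body = json.dumps(payload)  # string that an HTTP client would POST
print(body)
```

Typed, explicitly named parameters matter here because they are what the LLM sees when deciding which values to extract for the tool call.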

The quality of the unstructured query is key to getting good tool calls. It is possible to add an ODM rule set for data gathering: the data attributes required to decide on the loan application can be evaluated against what the LLM was able to extract. An LLM can build a JSON payload that conforms to a schema. Adding an agent that inspects the JSON data helps ask the human the right questions to obtain all the missing data.
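The missing-data check described above could look like the following sketch. It uses plain Python rather than an ODM rule set or an agent, and the required field names, `find_missing_fields`, and `follow_up_question` are all hypothetical.

```python
from typing import Any, Dict, List

# Hypothetical list of attributes needed before a decision can be requested.
REQUIRED_FIELDS: Dict[str, List[str]] = {
    "borrower": ["name", "yearlyIncome", "creditScore"],
    "loan": ["amount", "duration", "yearlyInterestRate"],
}


def find_missing_fields(payload: Dict[str, Any]) -> List[str]:
    """Return dotted paths of required fields that are absent or None."""
    missing: List[str] = []
    for section, fields in REQUIRED_FIELDS.items():
        data = payload.get(section) or {}
        for field in fields:
            if data.get(field) is None:
                missing.append(f"{section}.{field}")
    return missing


def follow_up_question(missing: List[str]) -> str:
    """Build the question to ask the human; empty when nothing is missing."""
    if not missing:
        return ""
    return "To assess the loan, please provide: " + ", ".join(missing) + "."


partial = {"borrower": {"name": "Jane Doe"}, "loan": {"amount": 50000}}
print(follow_up_question(find_missing_fields(partial)))
```

Running the check before calling the decision service avoids sending an incomplete request and gives the assistant a concrete question to ask instead of guessing values.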